Myth 1: Flow data is sampled and highly inaccurate

This statement holds for sFlow and NetFlow Lite, standards supported on obsolete devices used by SMB customers. All the major enterprise network equipment vendors provide routers and switches capable of exporting non-sampled and highly accurate traffic statistics. Recently, the general availability of flow data has exploded. All the major firewall vendors enable flow export, and virtualization platforms provide flow visibility as well. Even cost-efficient devices, such as MikroTik routers, can supply accurate statistics.

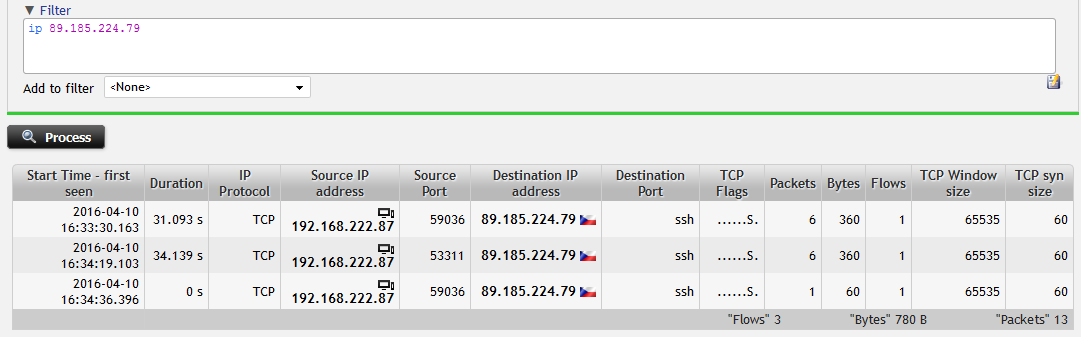

A professional analysis tool, the so-called collector, visualizes this data to show east-west traffic in your network, enabling you to understand the utilization of individual uplinks in your locations. However, the most important value is in leveraging flow for network incident troubleshooting. Let’s explore an example. A user is experiencing trouble when connecting to a server via SSH. With flow data in place, we can easily see that the user's requests reached the network but there was no response from the server. This excludes many potential root causes, such as server or network downtime or poor configuration. The range of causes has been narrowed down to two: either the service is not running or the communication is blocked by a firewall. This tremendously reduces the Mean-Time-To-Resolve.

Figure 1: Filtering user's communication with external service. Host is not receiving any response.
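The check described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Flow` record layout and the `unanswered` helper are hypothetical and do not represent a collector's actual data model. The idea is simply to look for flows that have no counterpart in the reverse direction.

```python
from collections import namedtuple

# Hypothetical, simplified flow record (not a real collector schema).
Flow = namedtuple("Flow", "src dst sport dport proto packets bytes")

def unanswered(flows):
    """Return flows for which no reverse-direction flow was observed."""
    seen = {(f.src, f.dst, f.sport, f.dport, f.proto) for f in flows}
    return [
        f for f in flows
        if (f.dst, f.src, f.dport, f.sport, f.proto) not in seen
    ]

flows = [
    # SSH connection attempts from the user, never answered by the server
    Flow("10.0.0.5", "192.0.2.10", 51000, 22, "TCP", 3, 180),
    # A healthy HTTPS session with traffic in both directions
    Flow("10.0.0.5", "192.0.2.20", 51001, 443, "TCP", 12, 2400),
    Flow("192.0.2.20", "10.0.0.5", 443, 51001, "TCP", 10, 9000),
]

no_reply = unanswered(flows)
for f in no_reply:
    print(f"{f.src} -> {f.dst}:{f.dport} got no response")
```

Only the SSH flow is reported here, which matches the situation in the example: the request left the user's host, but the server never answered.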

Myth 2: Flow is limited to L3/L4 visibility

That’s the original design. A flow is a sequence of packets in the network that share the same identifying 5-tuple: source IP address, destination IP address, source port, destination port and protocol. Based on this, packets are aggregated into flow records that accumulate the amount of transferred data, the number of packets and other information from the network and transport layers. However, more than five years ago at Flowmon, we came up with the concept of flow data enriched with information from the application layer. This concept has since been widely adopted by many other vendors. So, having detailed visibility into application protocols such as HTTP, DNS and DHCP is no issue these days. In fact, this moves troubleshooting use cases to a completely different level.
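To make the aggregation step concrete, here is a minimal Python sketch of grouping packets into flow records by their 5-tuple. The packet tuple layout and the `aggregate` function are assumptions made for illustration; a real exporter also handles timeouts, TCP flags and many more fields.

```python
from collections import defaultdict

def aggregate(packets):
    """Group packets by 5-tuple and accumulate per-flow counters.

    Each packet is assumed to be a tuple:
    (timestamp, src_ip, dst_ip, src_port, dst_port, protocol, size_bytes)
    """
    flows = defaultdict(
        lambda: {"packets": 0, "bytes": 0, "first": None, "last": None}
    )
    for ts, src, dst, sport, dport, proto, size in packets:
        rec = flows[(src, dst, sport, dport, proto)]
        rec["packets"] += 1
        rec["bytes"] += size
        rec["first"] = ts if rec["first"] is None else min(rec["first"], ts)
        rec["last"] = ts if rec["last"] is None else max(rec["last"], ts)
    return dict(flows)

packets = [
    (0.00, "10.0.0.5", "192.0.2.10", 51000, 80, "TCP", 60),
    (0.02, "192.0.2.10", "10.0.0.5", 80, 51000, "TCP", 60),
    (0.03, "10.0.0.5", "192.0.2.10", 51000, 80, "TCP", 500),
]

records = aggregate(packets)
for key, rec in records.items():
    print(key, rec)
```

Note that the two directions of the conversation end up as two separate flow records, because the source and destination fields of the 5-tuple are swapped.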

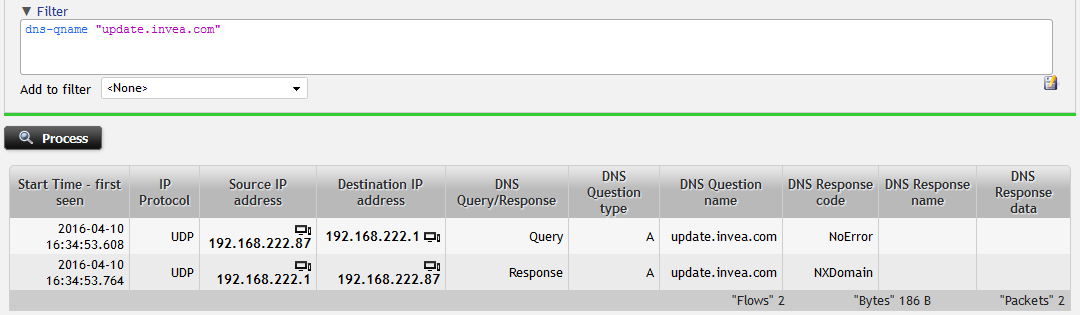

Again, let’s see an example. A user is complaining about an unresponsive service. By analysing traditional flow data we can see the traffic pattern and details about individual connections established by the user’s computer. Nothing seems to be wrong, but we cannot see any traffic towards the service. With the extended visibility provided by Flowmon’s enriched flow data engine, we can easily find out why. The requested service name is not properly configured in DNS, so the DNS server returns “NXDOMAIN”, which indicates that the requested domain name does not exist and no IP address can be provided. Therefore, no session is established.

Figure 2: Filtering in DNS traffic – searching for NXDomain DNS Response Code.
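The same filter can be expressed as a short Python sketch. The record fields (`client`, `dns_qname`, `dns_rcode`) are hypothetical names chosen for this illustration, not an actual export format. The only fixed fact used is that DNS response code 3 means NXDOMAIN.

```python
NXDOMAIN = 3  # DNS RCODE 3 ("Name Error"), as defined in RFC 1035

def failed_lookups(records, client_ip):
    """Return the names a client asked for that resolved to NXDOMAIN."""
    return [
        r["dns_qname"]
        for r in records
        if r.get("client") == client_ip and r.get("dns_rcode") == NXDOMAIN
    ]

# Hypothetical enriched flow records; plain L3/L4 records carry no DNS fields.
records = [
    {"client": "10.0.0.5", "dns_qname": "intranet.example.com", "dns_rcode": 0},
    {"client": "10.0.0.5", "dns_qname": "servce.example.com", "dns_rcode": 3},
    {"client": "10.0.0.7", "dns_qname": "missing.example.com", "dns_rcode": 3},
    {"client": "10.0.0.5", "bytes": 1200},
]

failed = failed_lookups(records, "10.0.0.5")
print(failed)
```

For the user at 10.0.0.5 the filter returns the single name that failed to resolve, which explains why no session towards the service ever appears in the flow data.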

Myth 3: Flow data misses network performance metrics

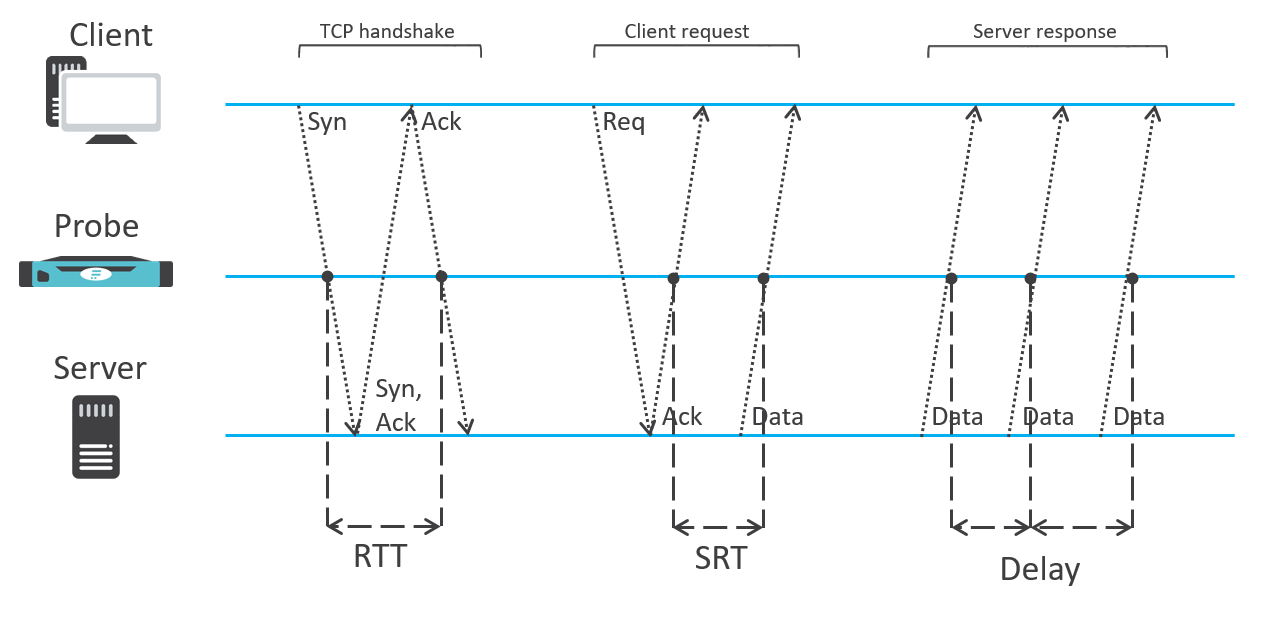

So far we have talked about troubleshooting. Another piece of the puzzle is network performance monitoring, which is not provided exclusively by packet capture tools. Network performance metrics can be easily extracted from the packet data and exported as part of the flow statistics. Performance indicators like RTT (round trip time), SRT (server response time), jitter or the number of retransmissions are available transparently for all the network traffic, regardless of the application protocol. Given its benefits, this approach has also been adopted by vendors such as Cisco, which provides these metrics as part of its AVC (application visibility and control) ART (application response time) extension. This means that you can measure the performance of all applications operated on premises, in a private cloud or in a public cloud environment. To show how these metrics are extracted, the figure illustrates how a Flowmon Probe measures them.

Figure 3: NPM metrics measurement principle.
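As a rough illustration of the measurement principle, the sketch below derives RTT and SRT from packet timestamps seen at an observation point. It assumes one common passive approach: RTT is taken from the TCP three-way handshake (SYN, SYN-ACK, ACK), and SRT is the delay between a client request and the first packet of the server's response. The function names and the microsecond timestamps are made up for the example.

```python
def round_trip_time(t_syn, t_synack, t_ack):
    """RTT seen by a passive probe: server-side part plus client-side part."""
    server_side = t_synack - t_syn   # probe -> server -> probe
    client_side = t_ack - t_synack   # probe -> client -> probe
    return server_side + client_side

def server_response_time(t_request, t_first_response):
    """Delay between the client's request and the server's first reply packet."""
    return t_first_response - t_request

# Timestamps in microseconds, relative to the first packet of the connection.
rtt_us = round_trip_time(t_syn=0, t_synack=12_000, t_ack=12_500)
srt_us = server_response_time(t_request=13_000, t_first_response=58_000)

print(f"RTT = {rtt_us / 1000} ms, SRT = {srt_us / 1000} ms")
```

Because both values come from timestamps of packets the probe already sees, they can be attached to the flow record without storing the packets themselves.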

Myth 4: Flow is not a comprehensive tool for network performance and diagnostics (NPMD)

According to Gartner, the main purpose of NPMD tools is to provide performance metrics by leveraging full packet data, and the capability to investigate network issues by analyzing full packet traces. But do we really need a packet capture solution? No. Enriched flow data provides accurate traffic statistics, visibility into L7 (application protocols) and network performance metrics, so it is fully capable of covering NPMD use cases.

Actually, with the rise of encrypted traffic, heterogeneous environments and an increase in network speeds, it is inevitable that flow will become the predominant approach in the NPMD field. The bandwidth explosion in particular strongly challenges legacy packet solutions. Let’s look at a simple example. A fully utilized 10G network backbone will require up to 108TB of data storage to keep all the network traffic for a period of 24 hours. This is a massive amount of data that you need to collect, store and analyse, which makes the whole process extremely expensive.

In fact, you will need just a fraction of this data. Flow data in the same situation will require around 250GB per day, which enables you to keep 30 days of history on a collector equipped with 8TB of storage capacity. So, by leveraging flow data you can keep your network operations tuned up and troubleshoot network-related issues with a fraction of the resources.
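The arithmetic behind these numbers is easy to check. The sketch below assumes a link saturated at 10 Gbit/s for the whole day, decimal units (1TB = 10^12 bytes), and that the 250GB flow figure is a per-day volume. These are assumptions made here to reproduce the article's numbers, not measured values.

```python
LINK_BITS_PER_SECOND = 10 * 10**9   # 10G backbone, assumed fully utilized
SECONDS_PER_DAY = 24 * 60 * 60

# Full packet capture: every bit on the wire is stored.
packet_bytes_per_day = LINK_BITS_PER_SECOND * SECONDS_PER_DAY // 8
packet_tb_per_day = packet_bytes_per_day / 10**12

# Flow data: figure taken from the text, assumed to be per day.
flow_gb_per_day = 250
flow_tb_30_days = flow_gb_per_day * 30 / 1000

reduction = packet_tb_per_day * 1000 / flow_gb_per_day

print(f"Packet capture: {packet_tb_per_day} TB per day")
print(f"Flow data:      {flow_tb_30_days} TB per 30 days")
print(f"Flow needs roughly 1/{reduction:.0f} of the storage")
```

Under these assumptions, 30 days of flow history fits in 7.5TB, which is why an 8TB collector is sufficient, while a single day of full packet capture would already need 108TB.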

So, what if you need to troubleshoot a very specific issue on an unsupported application protocol where there is no visibility in flow data? In such a case you need a packet capture based tool, right? In fact, not really. Having a Probe in place means that you have access to full packet data anyway. So, instead of just extracting metadata from the packets, you instruct the Probe to run a packet capture task. This task is time-limited and strictly focused on recording packets relevant to your investigation. On-demand selective packet capture is therefore easy to handle even in a multi-10G environment, without the need for massive storage capacity.
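Conceptually, such a task boils down to two conditions: a time window and a traffic filter. The following Python sketch models that idea only. The `CaptureTask` class and its fields are hypothetical and are not the Probe's actual interface.

```python
from dataclasses import dataclass

@dataclass
class CaptureTask:
    """Hypothetical time-limited capture focused on one host and port."""
    host: str
    port: int
    start: float      # capture start, seconds
    duration: float   # capture length, seconds

    def wants(self, ts, src, dst, sport, dport):
        """Decide whether a packet should be recorded."""
        in_window = self.start <= ts < self.start + self.duration
        to_host = dst == self.host and dport == self.port
        from_host = src == self.host and sport == self.port
        return in_window and (to_host or from_host)

task = CaptureTask(host="192.0.2.10", port=5060, start=100.0, duration=60.0)

# Inside the window and matching the filter: recorded.
print(task.wants(120.0, "10.0.0.5", "192.0.2.10", 40000, 5060))
# Matching the filter but after the window has closed: skipped.
print(task.wants(200.0, "10.0.0.5", "192.0.2.10", 40000, 5060))
```

Everything outside the window or the filter is discarded immediately, which is why the storage footprint stays small even on fast links.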

Flow data for future dynamics

Flow data is no longer a toy available only in a few limited use cases. Enriched flow data technology has matured so much that it has become a modern tool, providing sufficient granularity for solving network incidents, configuration issues, capacity planning, and much more. Compared to continuous packet capture tools, it also offers unmatched scalability, flexibility and ease of use. As a result, flow data saves time and reduces both MTTR and the total cost of network operations. Nor is flow data limited to network operations, performance monitoring and troubleshooting. It is also a basis for network behaviour analysis, able to detect and report on indicators of compromise, lateral movement, APTs, network attacks, etc.

It is no coincidence that reputable agencies in recent years have observed the gradual abandonment of packet capture technology and its replacement with flow. Flow data is making its way into the corporate environment and with continuous adoption of cloud, IoT, SDN and the ubiquitous bandwidth explosion, this trend will only continue.